44 posts tagged with "AWS"

Secure EC2 Private Subnet Access Without Bastion Hosts - Save Costs

Introduction

Understanding AWS Account Migration: A Step-by-Step Guide

Hello everyone! In today's blog, we'll explore how to invite an AWS management account that is already part of another organization into a new organization. This process can be a bit tricky, but we'll walk you through it step by step. Let's get started!

Simplifying AWS Notifications: A Guide to User Notifications

Expert Guide to Cloud Architecture: Tips for Aspiring Architects

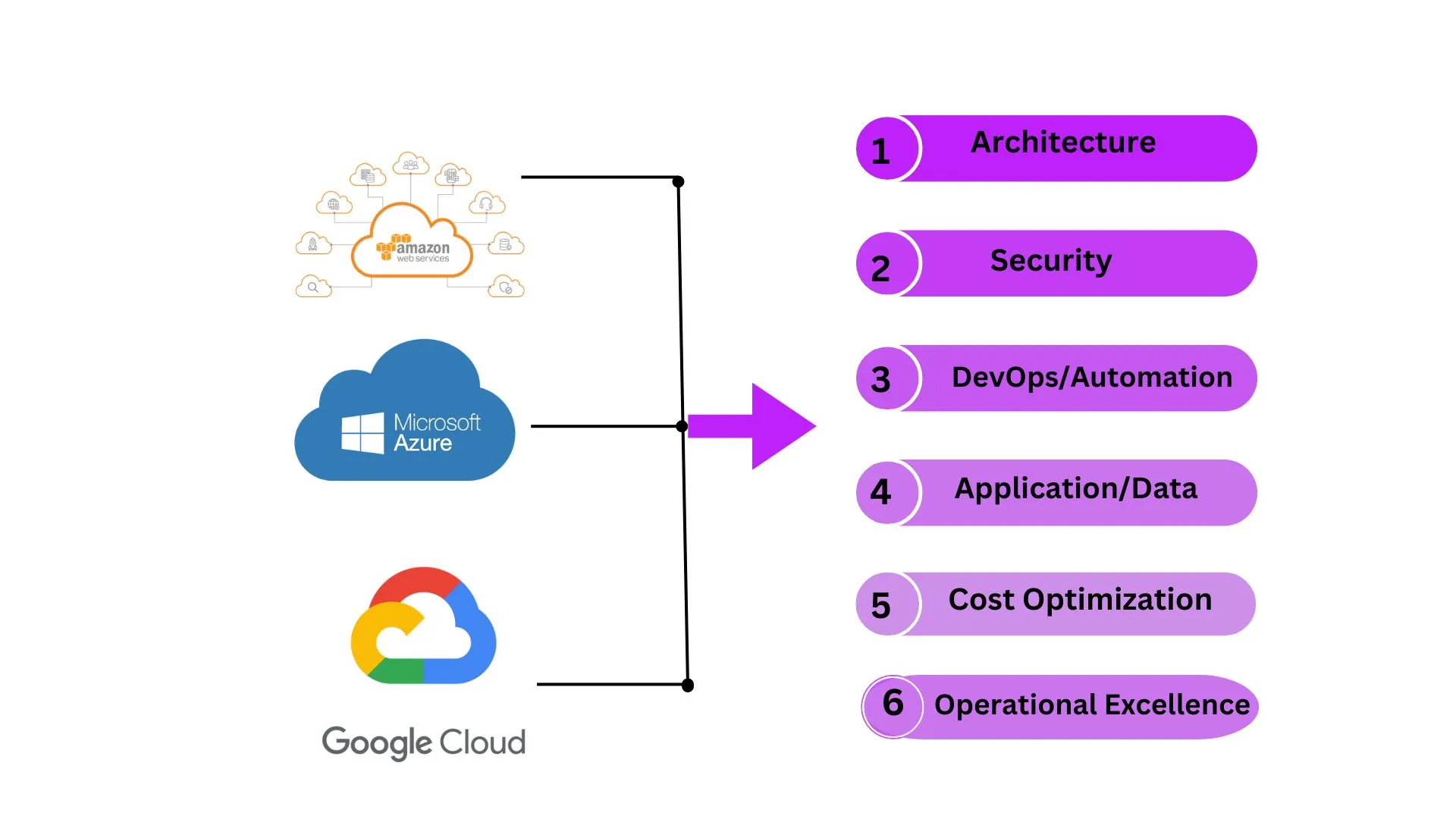

To become a good cloud architect, it's important to understand the essential pillars that support a well-architected framework. This framework helps in designing, deploying, and maintaining cloud applications efficiently. Here are some of the key pillars and insights from our experience at Arena Technologies.

Comprehensive Guide to Centralized Backups in AWS Organizations

AWS Services That Support Containers: Containers != Kubernetes

When it comes to choosing the right container service for your application, AWS offers a myriad of options, each tailored to specific needs and use cases. This guide aims to provide a comprehensive overview of when and how to use various AWS container services, based on our extensive research and industry experience.

Please refer to The Ultimate AWS ECS and EKS Tutorial.

One Bucket, One Key: Simplify Your Cloud Storage!

In today's cloud-centric environment, data security is more crucial than ever. One of the common challenges faced by organizations is ensuring that sensitive data stored in AWS S3 buckets is accessible only under strict conditions. This blog post delves into a hands-on session where we set up an AWS Key Management Service (KMS) policy to restrict access to a single S3 bucket using a customer's own encryption key.

A Detailed Overview Of AWS SES and Monitoring - Part 2

In our interconnected digital world, managing email efficiently and securely is a critical aspect of business operations. This post delves into a sophisticated setup using Amazon Web Services (AWS) that ensures your organization's email communication remains robust and responsive. Specifically, we will explore using AWS Simple Email Service (SES) in conjunction with Simple Notification Service (SNS) and AWS Lambda to handle email bounces and complaints effectively.
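As a rough illustration of the SES, SNS, and Lambda flow described above, here is a minimal sketch of a Lambda handler that reads bounce and complaint notifications delivered through SNS. It is not the code from the post itself. The function name `extract_failed_recipients` is hypothetical, and what you do with the addresses afterwards (for example, adding them to a suppression list) is left as an assumption.

```python
import json


def extract_failed_recipients(sns_event):
    """Collect (kind, addresses) pairs from an SNS-triggered Lambda event.

    Each SNS record carries the SES notification as a JSON string in
    record["Sns"]["Message"].
    """
    results = []
    for record in sns_event.get("Records", []):
        message = json.loads(record["Sns"]["Message"])
        # SES feedback notifications use "notificationType";
        # SES event publishing uses "eventType" for the same values.
        kind = message.get("notificationType") or message.get("eventType")
        if kind == "Bounce":
            recipients = message["bounce"]["bouncedRecipients"]
        elif kind == "Complaint":
            recipients = message["complaint"]["complainedRecipients"]
        else:
            # Deliveries and other notification types are ignored here.
            continue
        results.append((kind, [r["emailAddress"] for r in recipients]))
    return results


def lambda_handler(event, context):
    """Lambda entry point: log each bounced or complained address."""
    failures = extract_failed_recipients(event)
    for kind, addresses in failures:
        # Hypothetical follow-up: a real setup might store these addresses
        # or stop sending to them; this sketch only logs them.
        print(f"{kind}: {', '.join(addresses)}")
    return {"processed": len(failures)}
```

Keeping the parsing in its own function means it can be exercised locally with a hand-built event before the handler is wired to an SNS topic.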

Desirable Techniques: Understanding Modern Messaging with ActiveMQ and ActiveMQ Artemis