In the digital age, a Center of Excellence (CoE) plays a pivotal role in guiding organizations through complex technology transformations, ensuring that they remain competitive and agile. With the expertise to foster collaboration, streamline processes, and deliver sustainable outcomes, a CoE brings together people, processes, and best practices to help businesses meet their strategic goals.

In this post, we explore the essentials of establishing a CoE, including definitions, focus areas, models, and practical strategies to maximize return on investment (ROI).

What is a Center of Excellence?

A Center of Excellence (CoE) is a dedicated team within an organization that promotes best practices, innovation, and knowledge-sharing around a specific area, such as cloud, enterprise architecture, or microservices. By centralizing expertise and resources, a CoE enhances efficiency, consistency, and quality across the organization, addressing challenges and promoting cross-functional collaboration.

According to Jon Strickler from Agile Elements, a CoE is "a team of people that promotes collaboration and uses best practices around a specific focus area to drive business results." This guiding principle is echoed by Mark O. George in his book The Lean Six Sigma Guide to Doing More with Less, which defines a CoE as a "team that provides leadership, evangelization, best practices, research, support, and/or training."

Why Establish a CoE?

Organizations invest in CoEs to improve efficiency, foster collaboration, and ensure that projects align with corporate strategies. By establishing a CoE, businesses can:

- Streamline Processes: CoEs develop standards, methodologies, and tools that reduce inefficiencies, enhancing the delivery speed and quality of technology initiatives.

- Enhance Learning: Through shared learning resources, training, and certifications, CoEs help team members stay current with best practices and evolving technology.

- Increase ROI: CoEs facilitate better resource allocation and help companies achieve economies of scale, thereby maximizing ROI.

- Provide Governance and Support: As an approval authority, a CoE maintains quality and compliance, ensuring that projects align with organizational values and goals.

Core Focus Areas of a CoE

CoEs can cover a variety of functions, depending on organizational needs. Typical focus areas include:

- Planning and Leadership: Defining the vision, strategy, and roadmap to align technology initiatives with business objectives.

- Guidance and Support: Creating standards, tools, and knowledge repositories to support teams throughout the project lifecycle.

- Learning and Development: Offering training, certifications, and mentoring to ensure continuous skill enhancement.

- Asset Management: Managing resources, portfolios, and service lifecycles to prevent redundancy and optimize resource utilization.

- Governance: Acting as the approval body for initiatives, maintaining alignment with business priorities, and coordinating across business units.

Steps to Implement a CoE

1. Define Clear Objectives and Roles

Start by setting a clear mission and objectives that align with the organization's strategic goals. Design roles for core team members, including:

- Technology Governance Lead: Ensures that technology aligns with organizational goals.

- Architectural Standards Team: Develops and enforces standards and methodologies.

- Technology Champions: Subject-matter experts who provide mentorship and support.

2. Identify Success Metrics

Metrics are essential for measuring a CoE's impact. Examples include:

- Service Metrics: Cost efficiency, development time, and defect rates.

- Operations Metrics: Incident response time and resolution rates.

- Management Metrics: Project success rates, certification levels, and adherence to standards.

3. Develop Standards and Best Practices

Establish standards as a foundation for quality and efficiency. Document best practices and create reusable frameworks to ensure consistency across departments.

4. Create a Knowledge Repository

A centralized knowledge hub allows easy access to documentation, tools, and other resources, promoting continuous learning and collaboration across teams.

5. Focus on Training and Certification

Keeping team members updated on current best practices is crucial. Regular training and certifications validate the skills required to execute projects effectively.

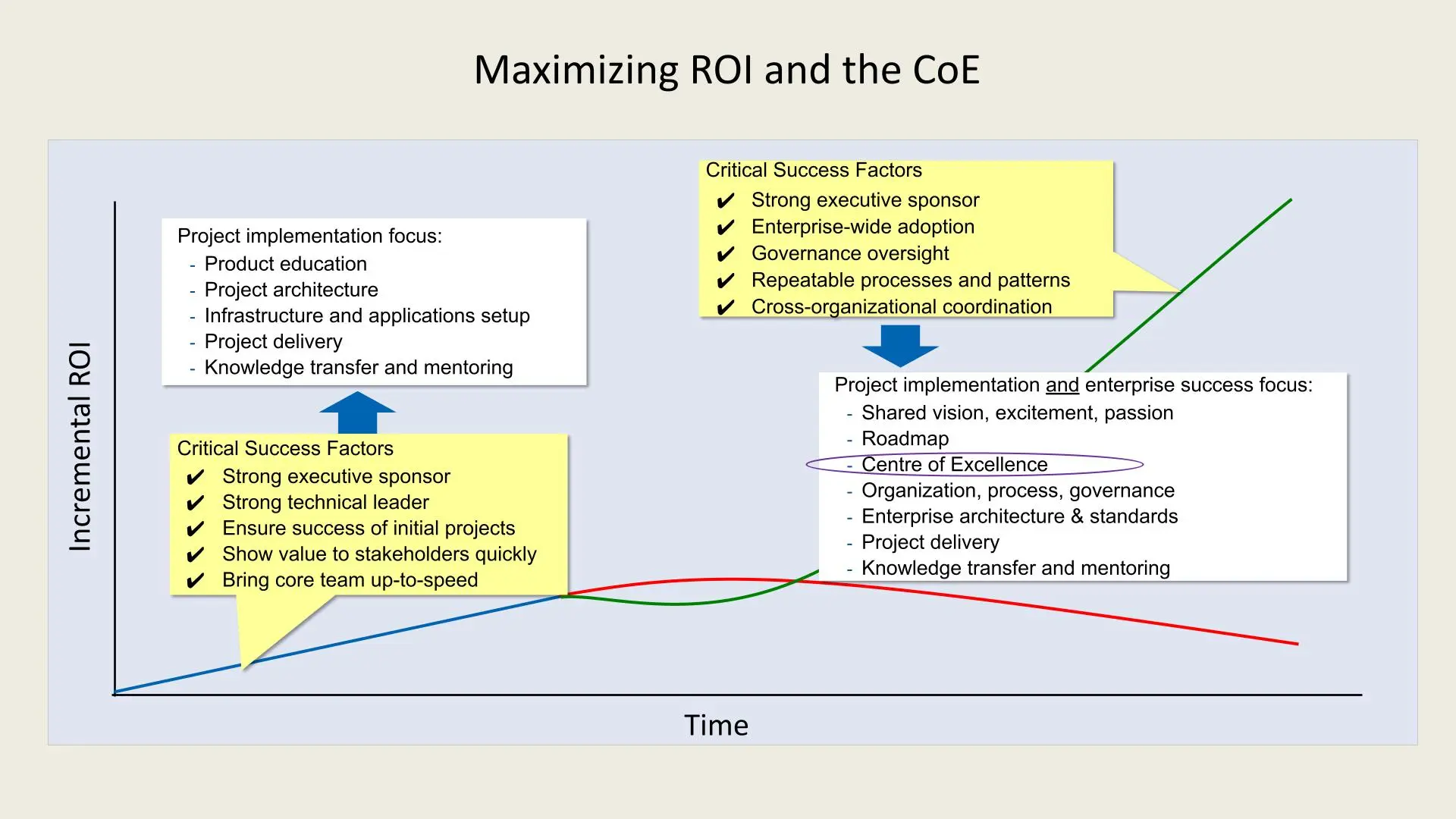

Maximizing ROI with a CoE

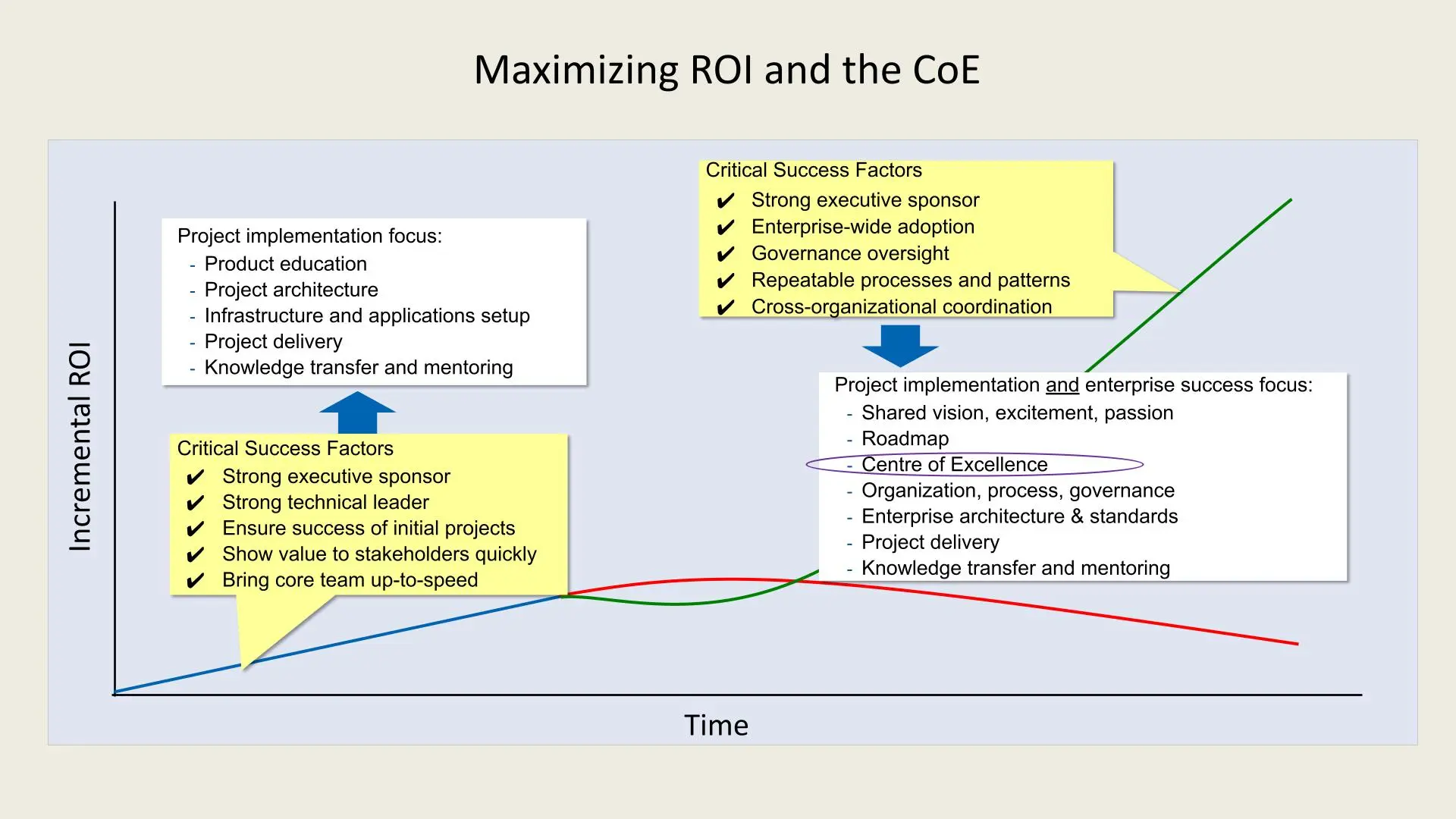

1. Project Implementation Focus:

To establish a successful CoE, the initial focus must include:

- Product Education: Ensuring the team understands and is skilled in relevant technologies and methodologies.

- Project Architecture: Defining a robust architecture that can support scalability and future needs.

- Infrastructure and Applications Setup: Setting up reliable infrastructure and integrating applications to support organizational goals.

- Project Delivery: Ensuring projects are delivered on time and within budget.

- Knowledge Transfer and Mentoring: Facilitating the sharing of knowledge and skills across teams to build long-term capabilities.

2. Critical Success Factors:

- Strong Executive Sponsor: Having a high-level executive who champions the CoE initiative is crucial for securing resources and alignment with organizational goals.

- Strong Technical Leader: A technically skilled leader is essential to drive the vision and make informed technical decisions.

- Initial Project Success: Early wins are essential to build confidence in the CoE framework and showcase its value.

- Value to Stakeholders: Demonstrating quick wins to stakeholders builds trust and secures continued support.

- Core Team Development: Bringing the core team up to speed ensures that they are equipped to handle responsibilities efficiently.

3. Scaling and Sustaining Success:

Once the foundation is established, the CoE must focus on broader organizational success, including:

- Shared Vision and Passion: A CoE thrives when it aligns with the organization's vision and ignites excitement among team members.

- Roadmap Development: A clear, strategic roadmap helps the CoE stay aligned with organizational goals and adapt to changes.

- Cross-organizational Coordination: Ensuring collaboration and coordination across different departments fosters a cohesive approach.

- Governance Oversight: Governance mechanisms help standardize processes, enforce policies, and maintain quality across projects.

4. Long-term ROI Goals:

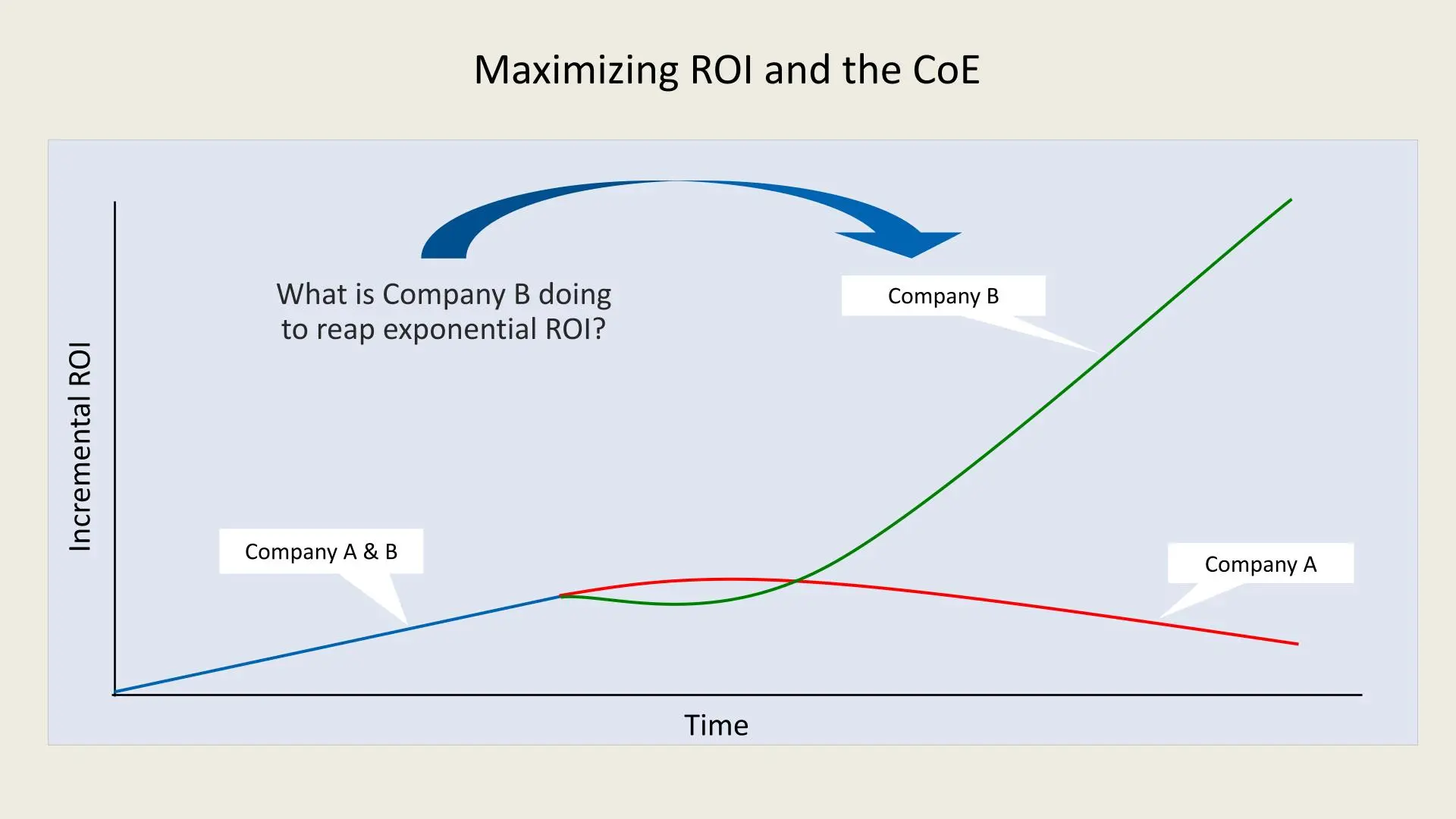

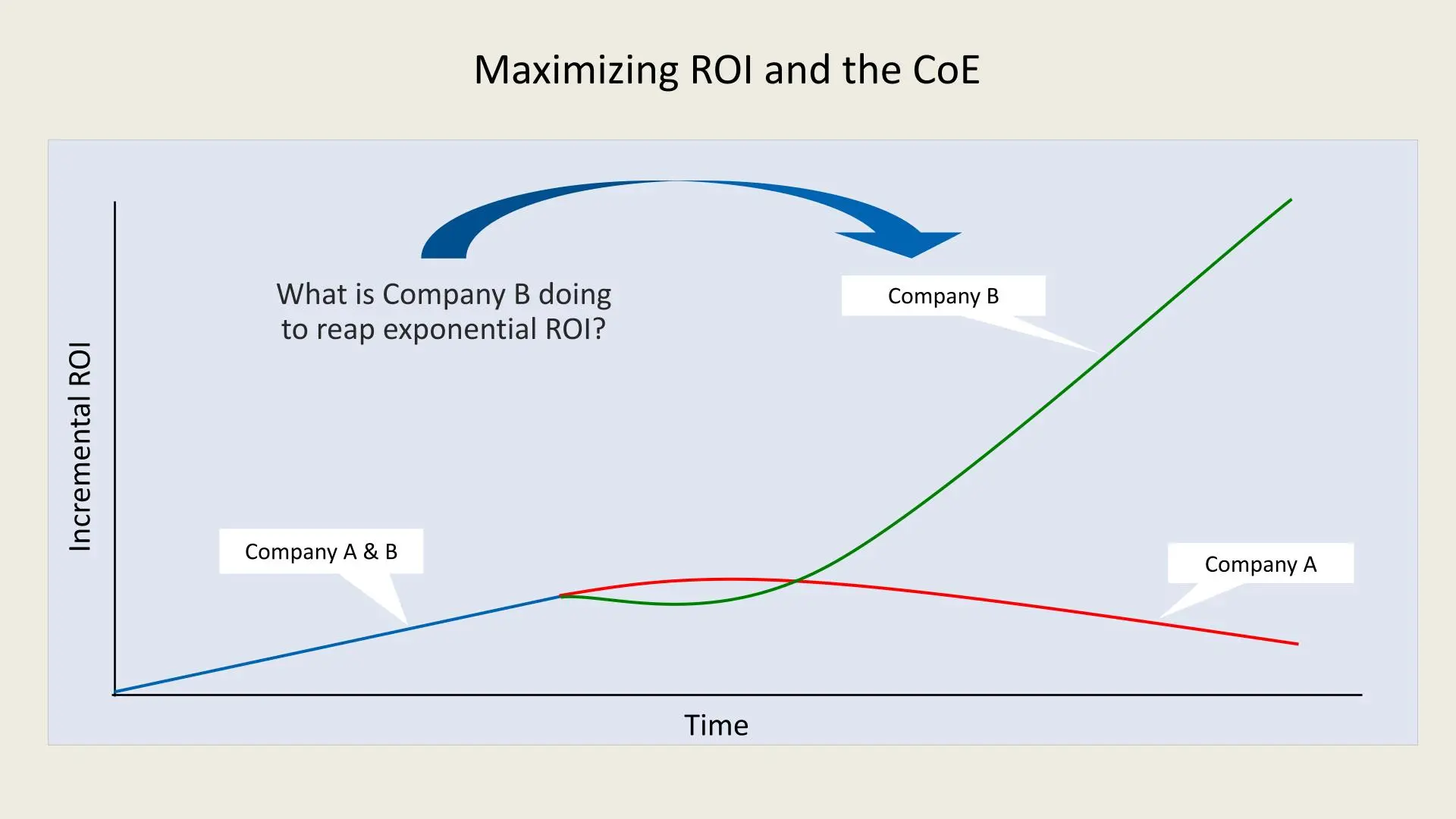

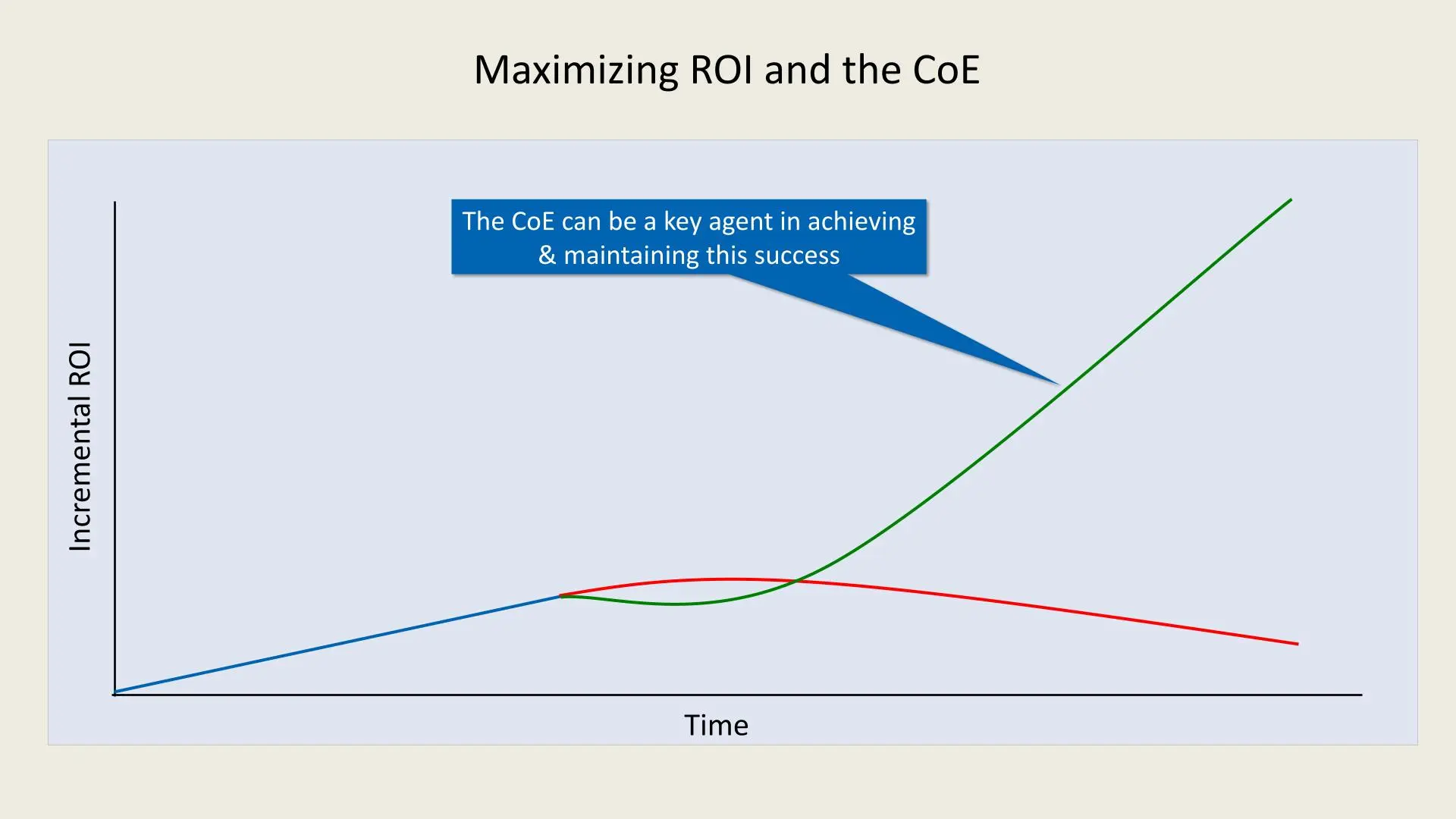

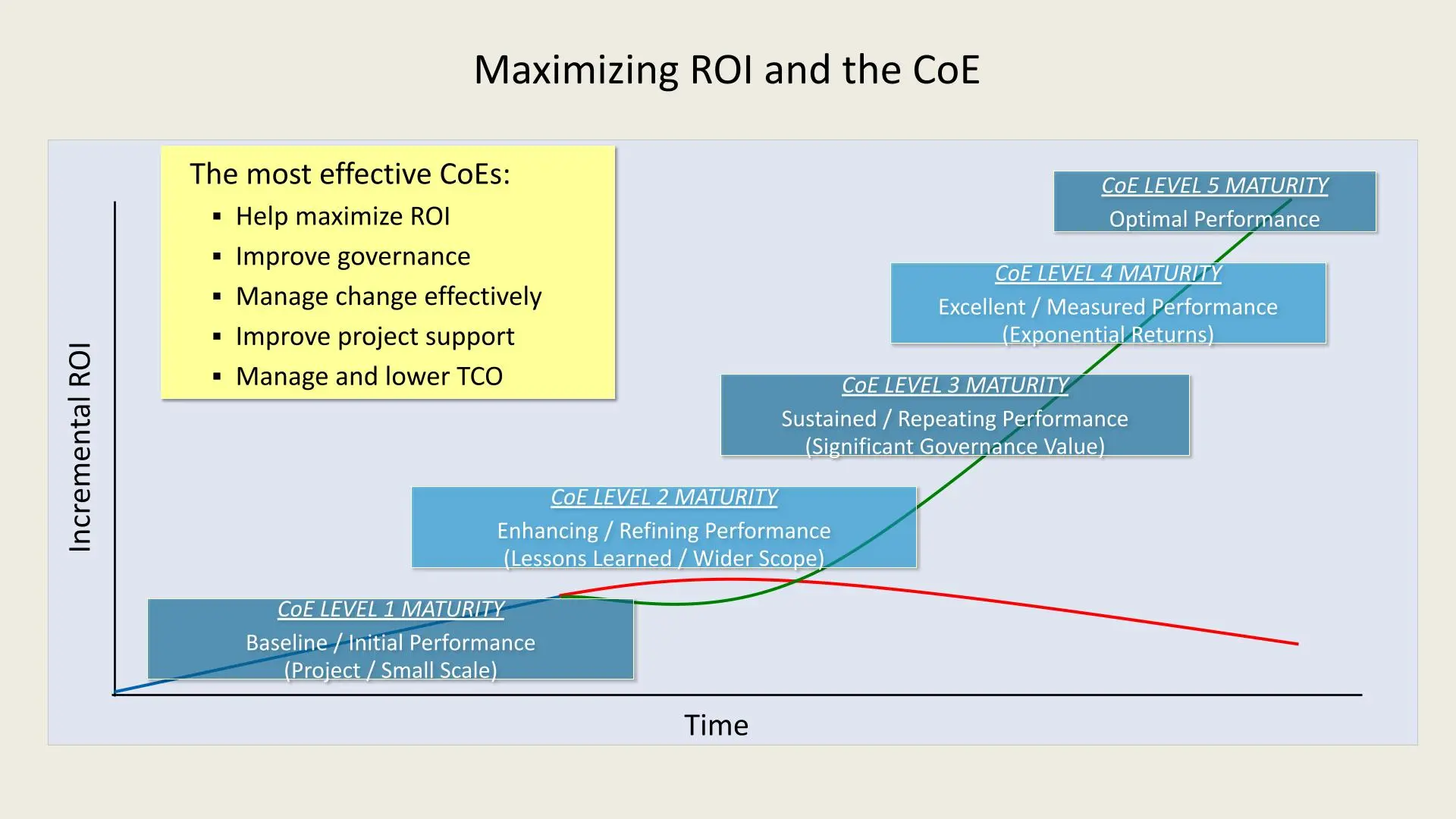

A mature CoE leads to optimized processes, minimized costs, and significant ROI growth. By integrating repeatable processes, organizational knowledge, and governance, the CoE helps sustain performance improvement, which is reflected by the green curve in the chart.

Key Takeaways:

- Structured Approach: Company B, the organization in the chart that has a CoE, benefits from the structure, standardized governance, and shared knowledge the CoE provides across projects, enabling it to scale efficiently.

- Exponential Growth: With a CoE in place, Company B's ROI grows exponentially as the organization matures, capturing more value from its initiatives.

- Sustainable Performance: A CoE helps maintain high performance by adapting to evolving business needs, ensuring continuous improvement, and maximizing the value derived from investments.

Maximizing ROI with a CoE

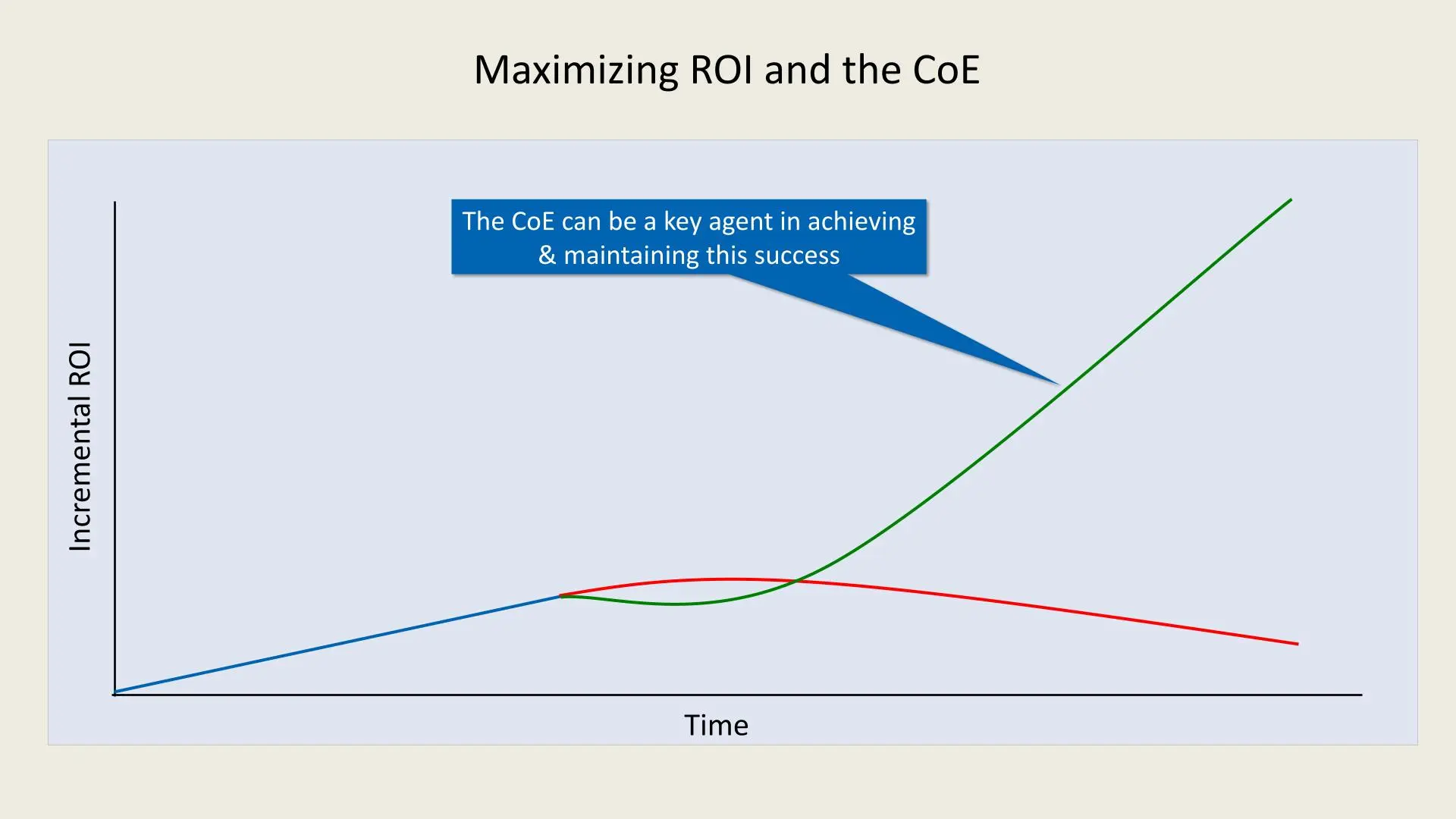

In the chart, Company A and Company B start with similar levels of incremental ROI. However, as time progresses, the ROI for Company A plateaus and even begins to decline, as represented by the red line. This suggests that without a structured CoE, organizations may struggle to sustain growth and consistently achieve high returns due to a lack of standardized practices, governance, and strategic alignment.

On the other hand, Company B, which has implemented a CoE, follows the green line that shows exponential ROI growth. The structured and mature CoE within Company B ensures that best practices, continuous improvement, and cross-functional collaboration are maintained. This leads to sustained, repeatable performance and eventually optimal ROI.
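The chart is qualitative, but the ROI it tracks is the standard ratio of net benefit to cost. The minimal Python sketch below computes cumulative ROI period by period from running totals; the yearly cost and benefit figures are invented for illustration and are not taken from the chart.

```python
def roi(total_benefit: float, total_cost: float) -> float:
    """ROI as a fraction: (benefit - cost) / cost. 0.5 means a 50% return."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


def cumulative_roi(benefits: list[float], costs: list[float]) -> list[float]:
    """ROI at the end of each period, computed from running totals."""
    if len(benefits) != len(costs):
        raise ValueError("benefits and costs must cover the same periods")
    results = []
    total_benefit = 0.0
    total_cost = 0.0
    for benefit, cost in zip(benefits, costs):
        total_benefit += benefit
        total_cost += cost
        results.append(roi(total_benefit, total_cost))
    return results


# Hypothetical yearly figures: a heavy setup cost in year 1, then lower running
# costs while benefits grow as standards and reusable assets accumulate.
costs = [400_000, 200_000, 200_000]
benefits = [100_000, 500_000, 1_000_000]
print(cumulative_roi(benefits, costs))  # [-0.75, 0.0, 1.0]
```

Early periods often show negative ROI because setup costs land before the benefits do; the sketch only reflects the numbers fed into it and says nothing on its own about whether a real curve will plateau or keep growing.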

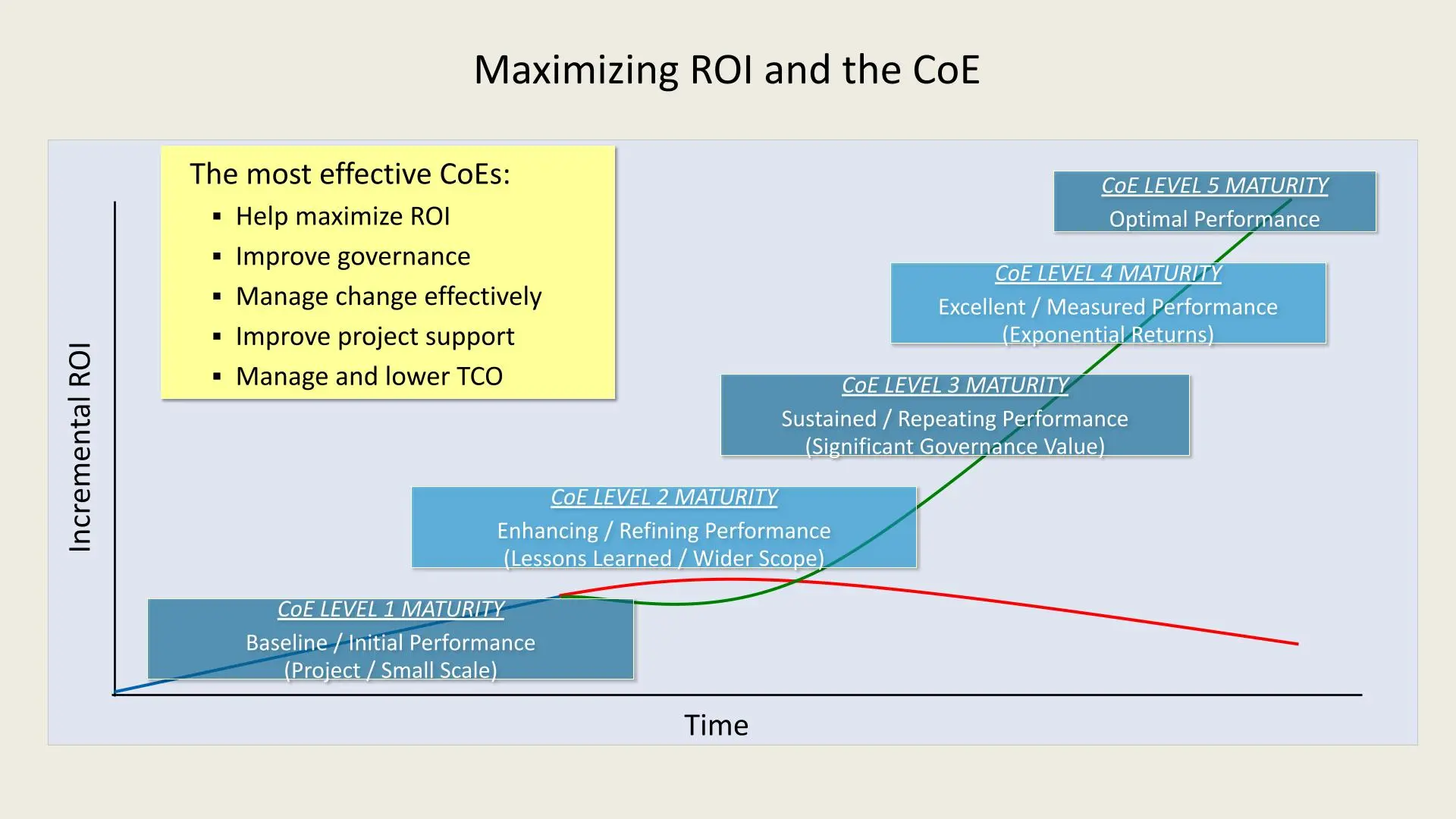

CoE Maturity Levels and Their ROI Impact

Level 1 Maturity: Baseline/Initial Performance

- Initial small-scale projects define this stage.

- ROI is relatively low as processes and standards are still under development.

Level 2 Maturity: Enhancing/Refining Performance

- The CoE begins to refine its approach, learning from initial projects.

- Wider scope and incremental improvements lead to better ROI.

Level 3 Maturity: Sustained/Repeating Performance

- At this stage, CoEs establish repeatable processes with substantial governance.

- This results in steady and significant improvements in ROI.

Level 4 Maturity: Excellent/Measured Performance

- Performance becomes measurable, and returns become exponential.

- The CoE's processes are well-governed, supporting growth and optimizing costs.

Level 5 Maturity: Optimal Performance

- The CoE reaches optimal performance, where ROI is maximized and sustained.

- Continuous improvements and strategic insights drive ongoing success.

Key Benefits of Effective CoEs

The most impactful CoEs:

- Maximize ROI: By implementing best practices and fostering collaboration, CoEs significantly increase ROI.

- Improve Governance: They establish structured processes and compliance, ensuring smoother operations.

- Manage Change Effectively: CoEs play a pivotal role in managing transitions and adapting to new technologies.

- Improve Project Support: They enhance support for various initiatives across the organization.

- Lower Total Cost of Ownership (TCO): By optimizing resources and eliminating redundancies, CoEs reduce operational costs.

Core Focus Areas of a CoE

- Planning and Leadership: Outlining a strategic roadmap, managing risks, and setting a vision.

- Guidance and Support: Establishing standards, tools, and methodologies.

- Shared Learning: Providing education, certifications, and skill development.

- Measurements and Asset Management: Using metrics to demonstrate CoE value and managing assets effectively.

- Governance: Ensuring investment in high-value projects and creating economies of scale.

The Most Valuable Functions of a Center of Excellence (CoE)

In today's rapidly evolving technology landscape, organizations are increasingly leveraging Centers of Excellence (CoEs) to drive digital transformation, manage complex projects, and foster innovation. But what functions make a CoE truly valuable? According to a Forrester survey, the highest-impact CoE functions go beyond technical training, emphasizing governance, leadership, and vision. In this post, we will break down the essential functions of a CoE and explore why they are crucial to an organization's success.

The Role of Governance in CoE Success

The first step in understanding a CoE's value is to recognize its role as a governance body rather than just a training entity. According to Forrester's survey results, having a CoE correlates with higher satisfaction levels with cloud technologies and other technological initiatives. CoEs primarily provide value through leadership and governance, which guides organizations in making informed decisions and maintaining a strategic focus.

Key points include:

- Higher Satisfaction: Organizations with CoEs report better satisfaction with their technological initiatives.

- Focus on Leadership: Rather than detailed technical skills, CoEs drive value by establishing a leadership framework.

- Governance First, Training Second: The CoE should primarily be seen as a governance body, shaping organizational policy and direction.

Key Functions of a CoE

A successful CoE is defined by several core functions that help align organizational goals, foster innovation, and ensure effective project management. Here are some of the most valuable functions, as highlighted in Forrester's survey:

- Creating & Maintaining Vision and Plans: CoEs provide a broad vision and ensure that all stakeholders are aligned. This includes setting a strategic direction for technology initiatives to keep everyone on track.

- Acting as a Governance Body: A CoE provides approval on key decisions, giving it a strong leadership position. This approval process acts as a mentorship tool and ensures that guidance is followed effectively.

- Managing Patterns for Implementations: By creating and managing implementation patterns, CoEs make it easier for teams to follow established best practices, reducing the need for reinventing solutions.

- Portfolio Management of Services: CoEs organize services and tools to facilitate their use across the organization. This management helps streamline workflows, often using resources like spreadsheets, registries, and repositories.

- Planning for Future Technology Needs: A CoE avoids the risk of each team working in silos by setting a long-term plan for technology evolution, ensuring cohesive growth that aligns with the organization's goals.

Centers of Excellence (CoEs) are powerful assets that can significantly enhance an organization's capability to manage and implement new technologies effectively. By focusing on governance and leadership rather than technical skills alone, CoEs bring the organization closer to achieving its strategic vision. Whether it's managing service portfolios or creating a cohesive plan for future technologies, CoEs provide indispensable guidance in today's fast-paced, tech-driven world.
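The portfolio-management function can start as little more than a registry of services, their owners, and the projects that consume them. The minimal Python sketch below assumes a hypothetical in-memory `ServiceRegistry`; a real CoE might back the same idea with a spreadsheet, a service registry product, or a repository, and the service and team names are made up.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceEntry:
    name: str
    owner: str    # team accountable for the service
    version: str
    consumers: set[str] = field(default_factory=set)  # projects that reuse it


class ServiceRegistry:
    """Hypothetical in-memory service portfolio."""

    def __init__(self) -> None:
        self._services: dict[str, ServiceEntry] = {}

    def register(self, entry: ServiceEntry) -> None:
        # Reject duplicates so two teams do not build the same service twice.
        if entry.name in self._services:
            raise ValueError(f"service {entry.name!r} is already registered")
        self._services[entry.name] = entry

    def record_consumer(self, service_name: str, project: str) -> None:
        # Raises KeyError if the service was never registered.
        self._services[service_name].consumers.add(project)

    def reuse_count(self, service_name: str) -> int:
        return len(self._services[service_name].consumers)

    def unused(self) -> list[str]:
        # Services nobody consumes: candidates for review or retirement.
        return sorted(n for n, e in self._services.items() if not e.consumers)


registry = ServiceRegistry()
registry.register(ServiceEntry("customer-lookup", owner="Integration team", version="1.2"))
registry.register(ServiceEntry("pdf-render", owner="Platform team", version="0.9"))
registry.record_consumer("customer-lookup", "billing-portal")
registry.record_consumer("customer-lookup", "crm-migration")
print(registry.reuse_count("customer-lookup"))  # 2
print(registry.unused())  # ['pdf-render']
```

Rejecting duplicate names at registration time is the simplest way to surface redundancy early; the consumer sets also feed reuse and retirement metrics.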

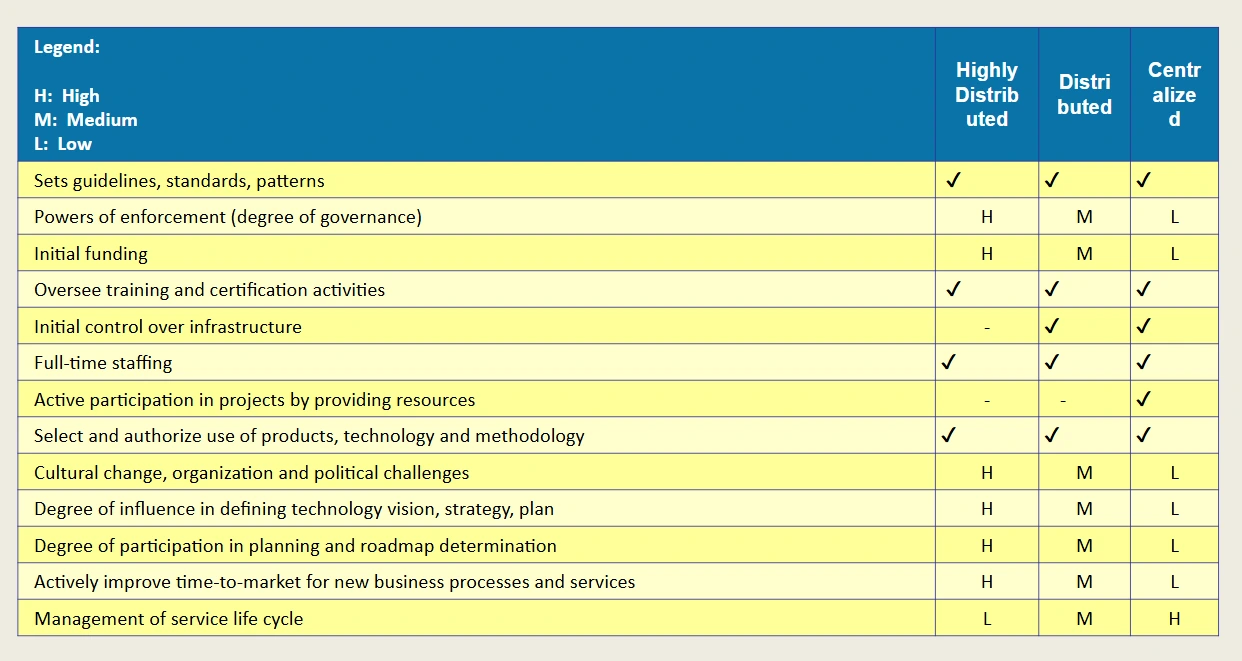

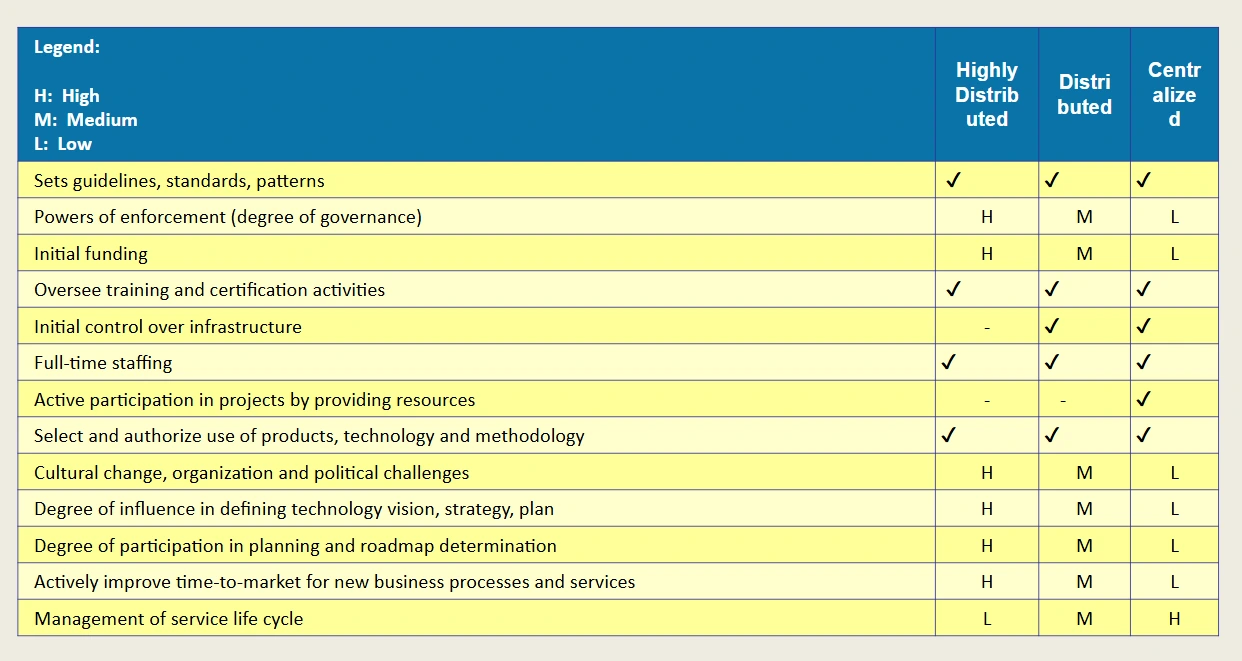

Types of CoE Models

- Centralized Model (Service Center): Best suited for strong governance and standards.

- Distributed Model (Support Center): Allows for flexibility and faster adoption.

- Highly Distributed Model (Steering Group): Minimal staffing, ideal for independent business unit support.

The structure of a CoE varies based on organizational size and complexity. Here are three primary models:

1. Centralized Model

In this model, the CoE operates as a single, unified entity. It manages all technology-related practices and provides support to the entire organization.

Pros:

- Easier Governance: Centralized models streamline oversight and standardization.

- Simple Feedback Loops: By centralizing processes, this model enables more efficient communication and rapid issue resolution.

Cons:

- Limited Flexibility: The centralized model may struggle to meet the diverse needs of larger organizations.

2. Distributed Model

Here, each department has its own CoE, allowing teams to tailor best practices to their unique requirements.

Pros:

- Adaptable to Specific Needs: Each department can quickly adopt and adapt standards to suit its goals.

- Scalable: The distributed model grows more effectively with the organization.

Cons:

- Higher Complexity: Governance and coordination become challenging, especially across multiple CoEs.

3. Highly Distributed Model

In a highly distributed setup, the CoE functions as a flexible steering group, with minimal centralized authority. This model is particularly effective in global enterprises with varied business needs.

Pros:

- High Flexibility: This model meets the unique requirements of diverse business units.

- Adaptable to Large Organizations: It supports scalability and regional differences effectively.

Cons:

- Complex Governance: Managing coherence across different units requires robust oversight mechanisms.

CoE & E-Strategy

For a CoE to evolve and meet organizational goals, it must continuously:

- Evangelize: Promote new strategies and state-of-the-art practices.

- Evolve: Adapt frameworks and processes as technology and business needs change.

- Enforce: Ensure adherence to standards and guidelines.

- Escalate: Address and resolve governance challenges effectively.

Typical CoE model characteristics

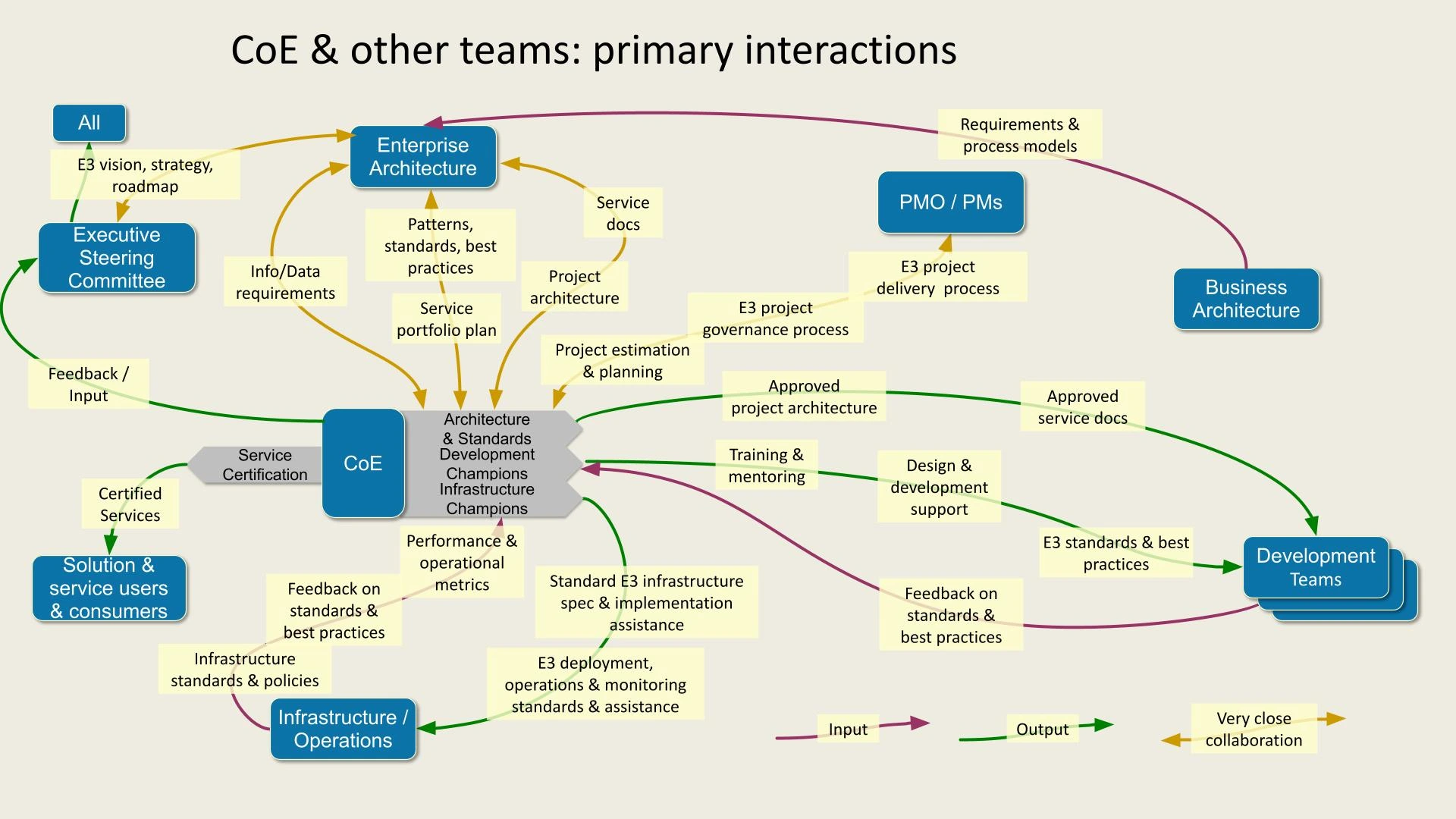

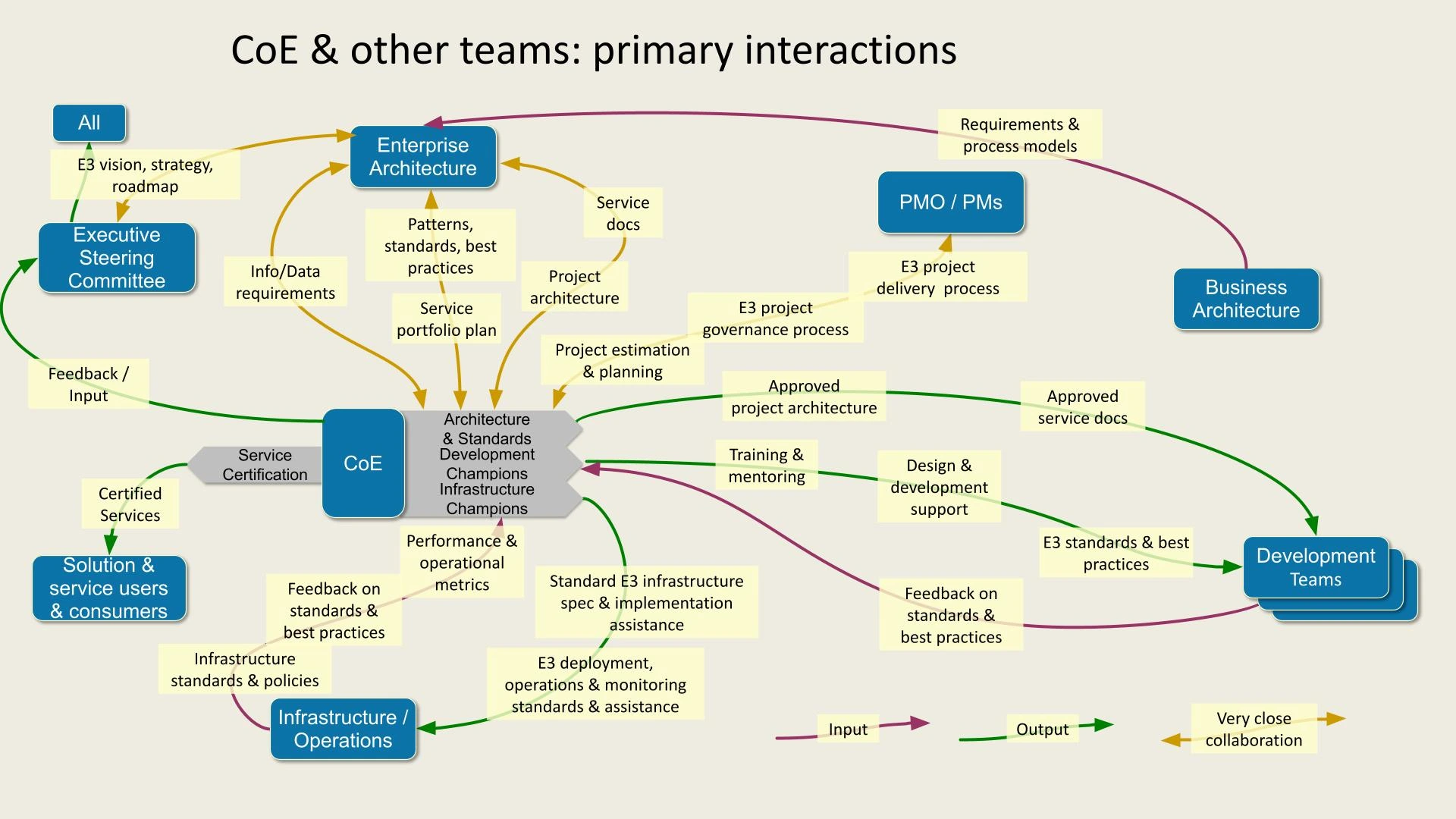

The diagram depicts the primary interactions between the Center of Excellence (CoE) and various teams:

- Executive Steering Committee: Provides E3 vision, strategy, and roadmap, and receives feedback/input.

- Enterprise Architecture: Collaborates with CoE on patterns, standards, and best practices, providing project architecture and service portfolio plans.

- PMO/Project Managers: Oversee project governance, requirements, and process models.

- Business Architecture: Supplies approved service documents and E3 project delivery process support.

- Development Teams: Receive E3 standards, training, and approved service docs for design and development.

- Infrastructure/Operations: Ensures infrastructure standards, operations support, and feedback on best practices.

- Solution & Service Users: Receive certified services and provide input.

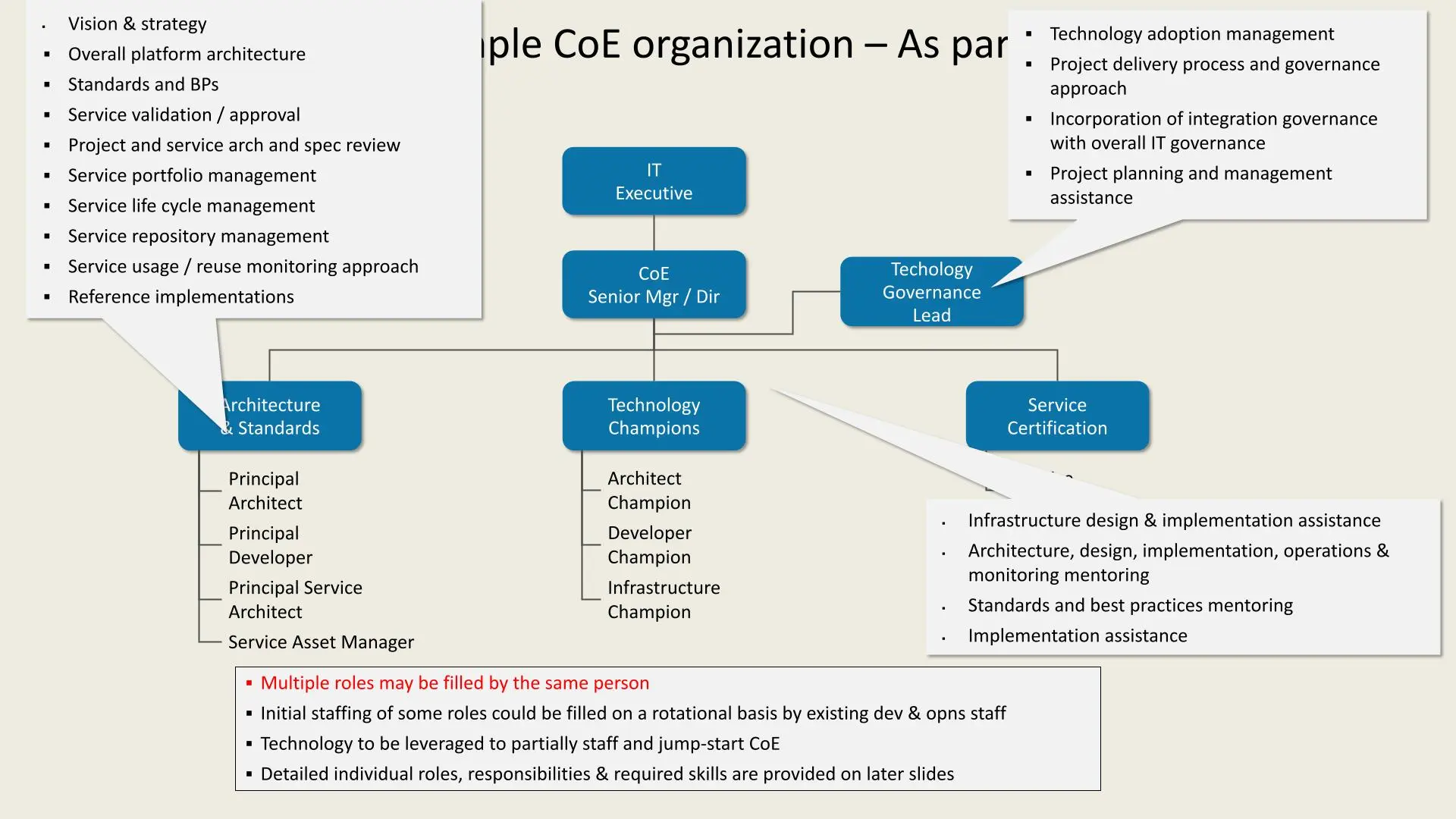

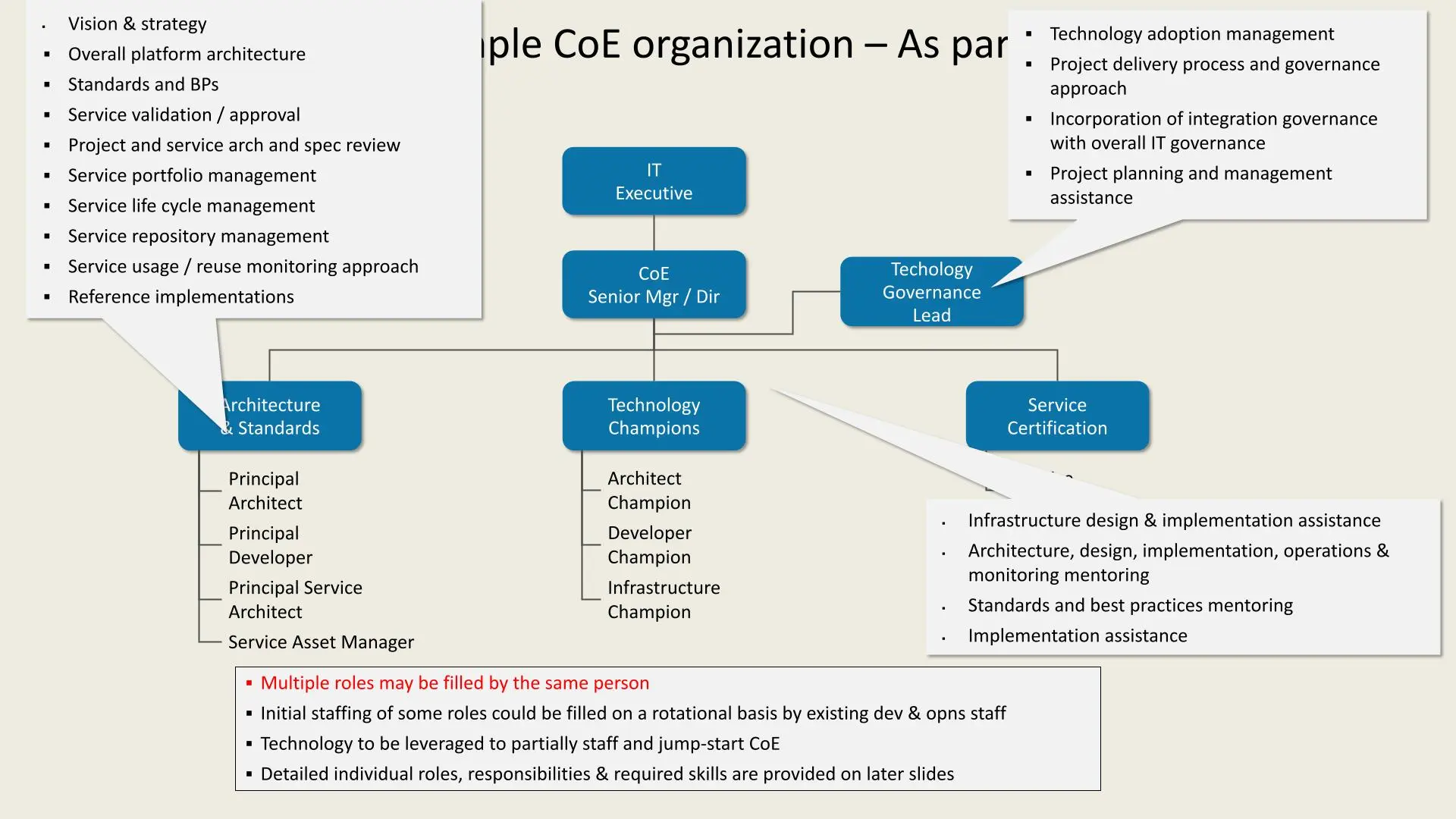

An example CoE (Center of Excellence) organization within an Enterprise Architecture (EA) framework:

- IT Executive oversees the CoE Senior Manager/Director.

- Technology Governance Lead handles technology adoption, project governance, and planning assistance.

- Architecture & Standards defines vision, platform architecture, standards, and service management. Key roles include Principal Architect, Developer, Service Architect, and Asset Manager.

- Technology Champions focus on specific areas: Architect Champion, Developer Champion, and Infrastructure Champion.

- Service Certification provides infrastructure, architecture, and implementation support, ensuring standards and best practices.

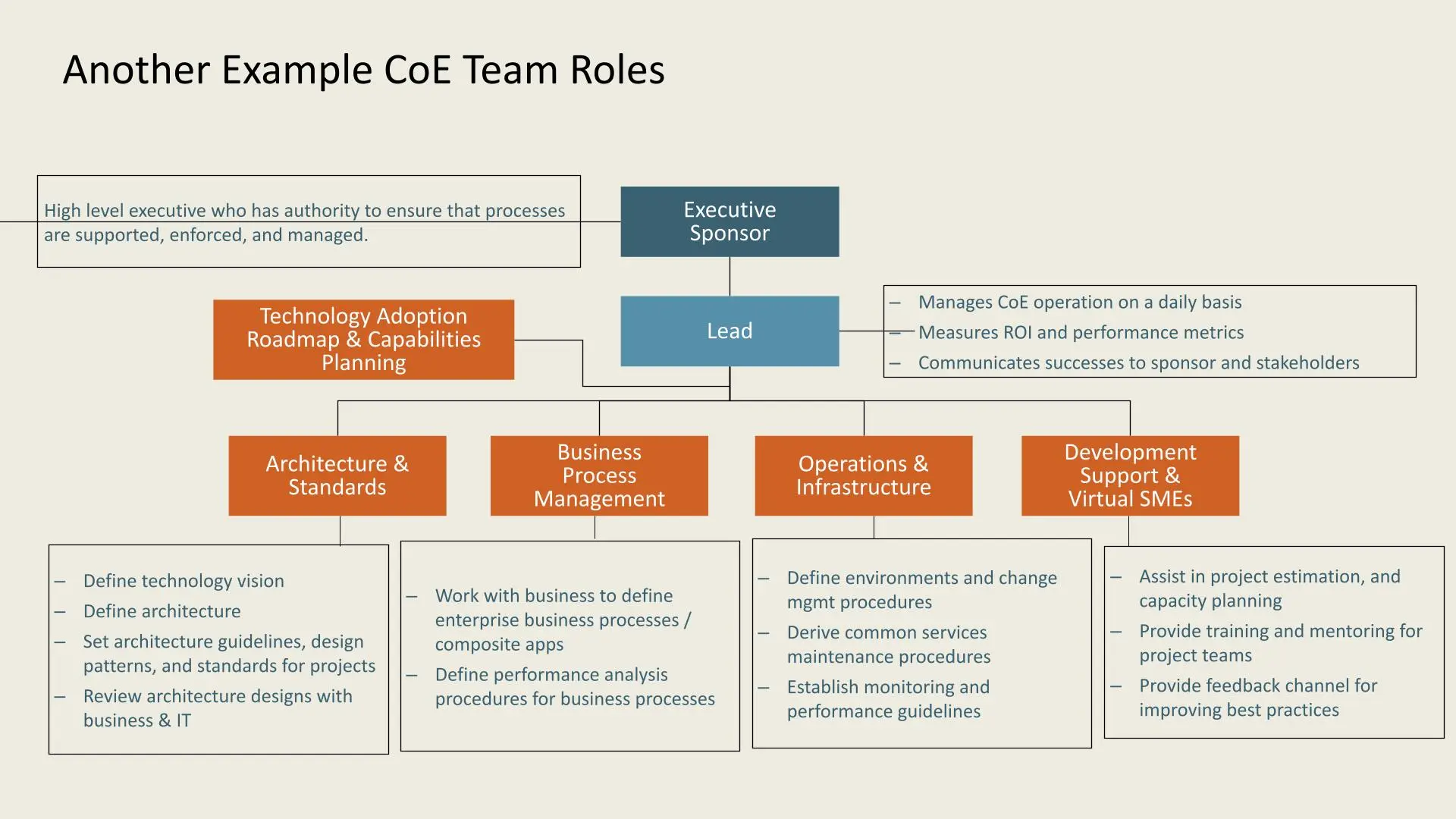

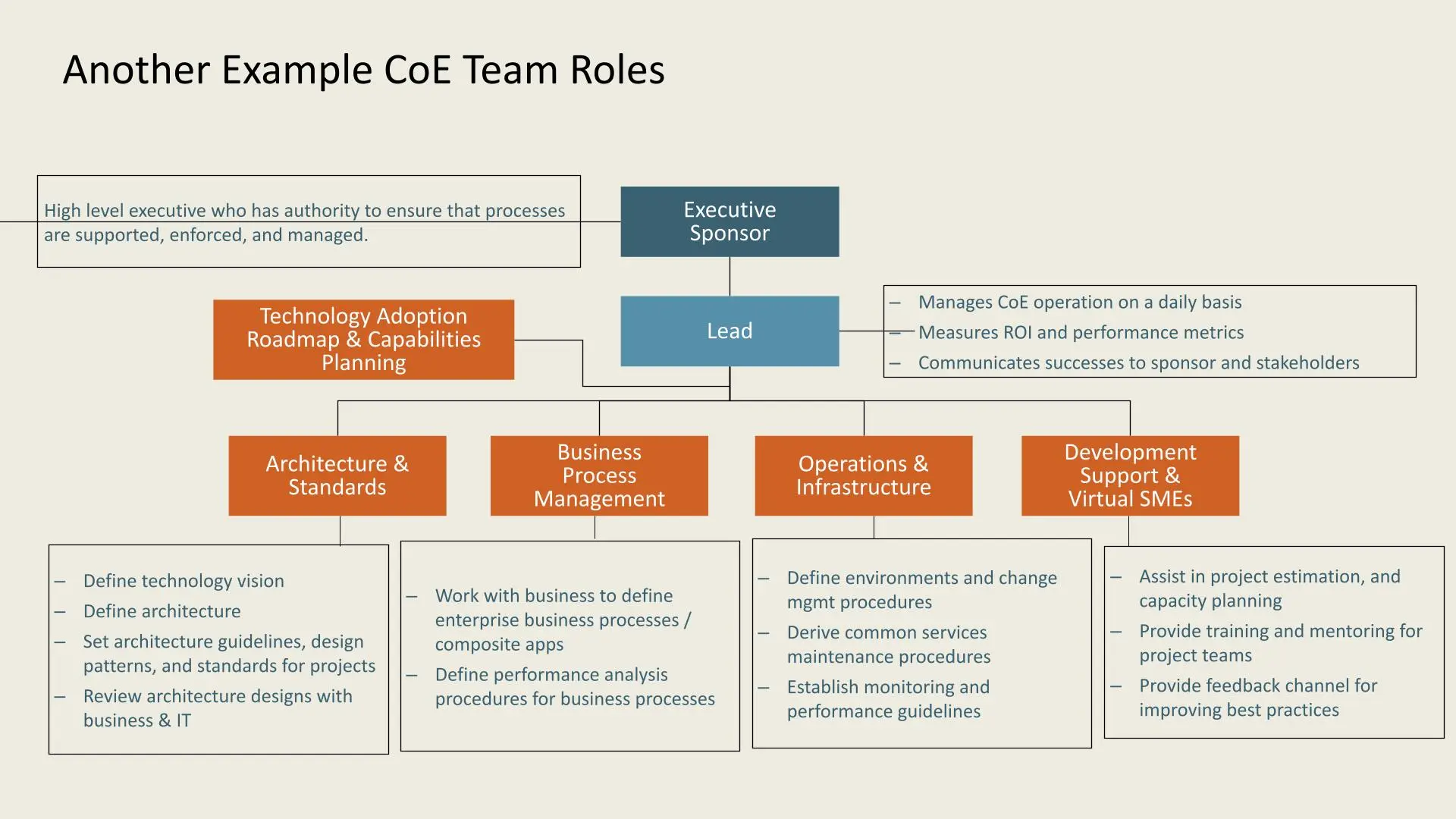

This example outlines key roles in a CoE team structure:

- Executive Sponsor: Ensures process support, enforcement, and management.

- Lead: Oversees daily CoE operations, measures ROI, and communicates achievements.

Functional areas include:

- Technology Adoption Roadmap & Capabilities Planning

- Architecture & Standards: Defines technology vision, architecture, and standards.

- Business Process Management: Aligns with business to define processes and performance analysis.

- Operations & Infrastructure: Manages environments, maintenance, and performance guidelines.

- Development Support & Virtual SMEs: Provides project support, training, and feedback for best practices.

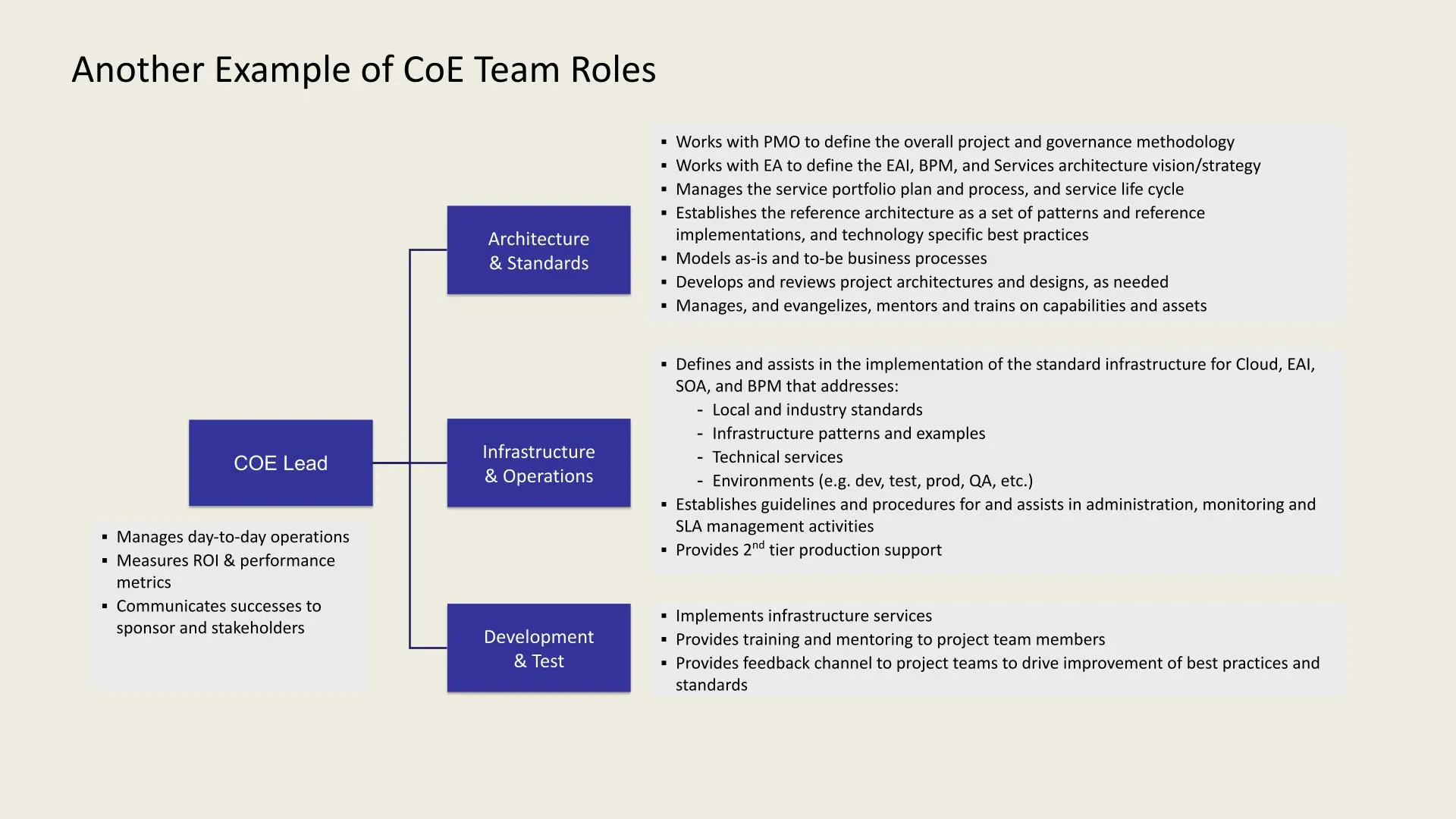

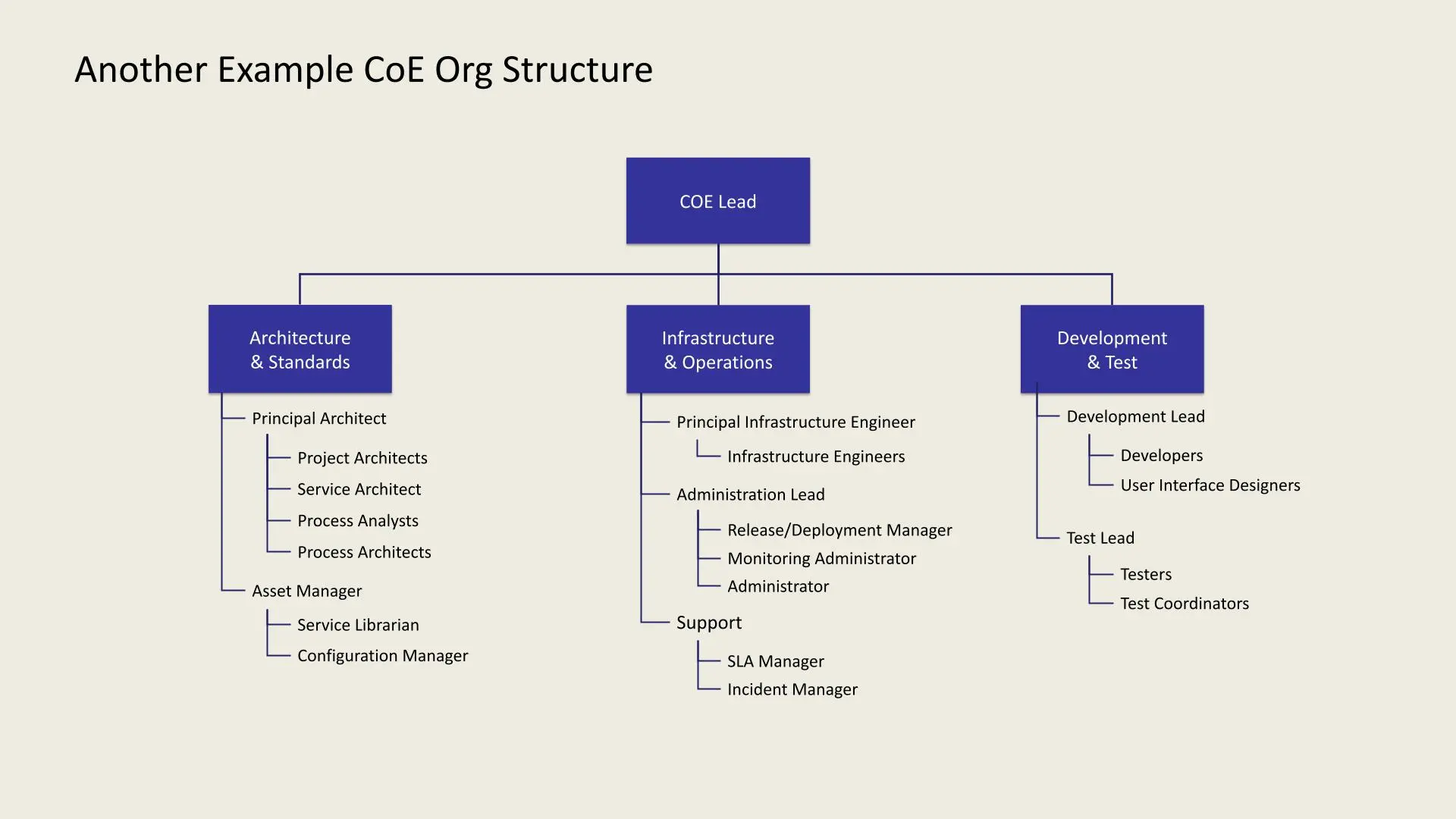

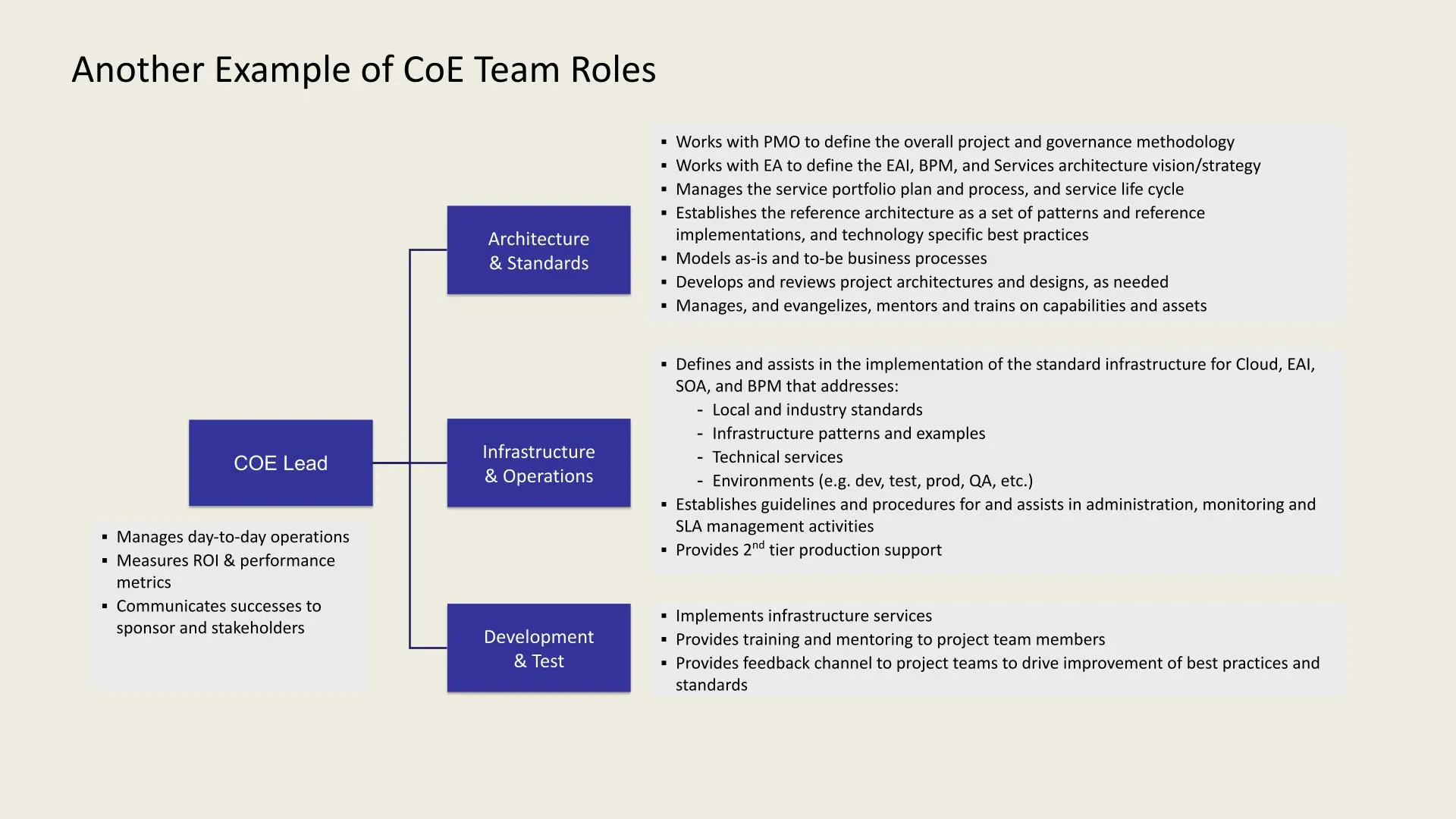

Another example outlines key roles in a CoE team structure:

- CoE Lead: Oversees daily operations, tracks ROI and performance, and communicates results to stakeholders.

- Architecture & Standards: Collaborates with PMO and EA, manages service portfolio, sets architecture standards, models business processes, and provides training.

- Infrastructure & Operations: Defines infrastructure standards, manages environments (e.g., dev, test, prod), handles administration, monitoring, SLA management, and provides second-tier support.

- Development & Test: Implements infrastructure services, provides team training, and facilitates feedback for standards improvement.

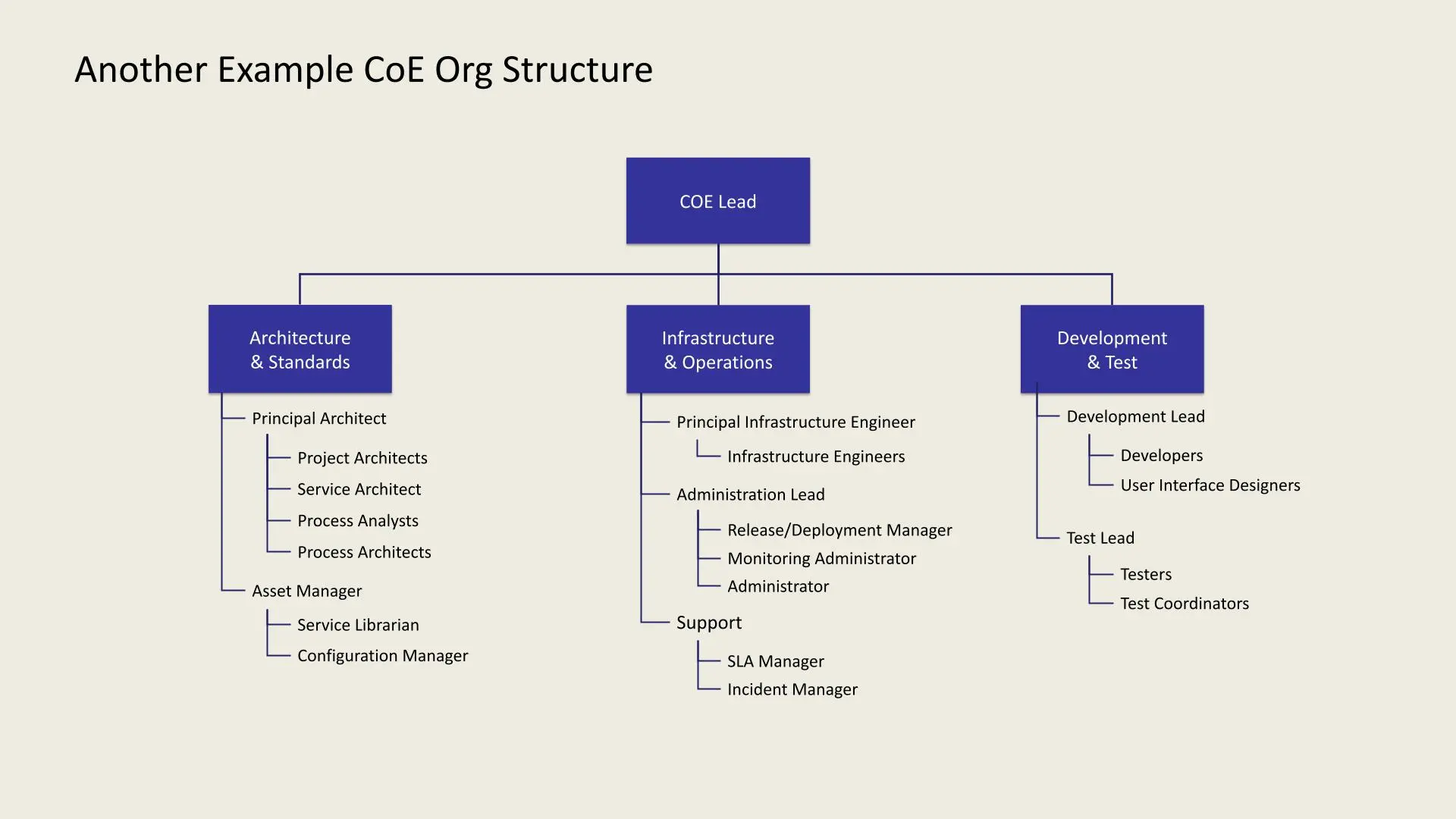

A more granular example lists the individual roles within each division:

- CoE Lead: Oversees all divisions.

- Architecture & Standards: Led by a Principal Architect, includes Project Architects, Service Architect, Process Analysts, Process Architects, Asset Manager, Service Librarian, and Configuration Manager.

- Infrastructure & Operations: Led by a Principal Infrastructure Engineer, includes Infrastructure Engineers, Administration Lead, Release/Deployment Manager, Monitoring Administrator, Administrator, SLA Manager, and Incident Manager.

- Development & Test: Led by a Development Lead and Test Lead, includes Developers, UI Designers, Testers, and Test Coordinators.

Sample IT Metrics for Evaluating Success

Service/Interface Development Metrics:

- Cost and time to build

- Cost to change

- Defect rate during warranty

- Reuse rate

- Demand forecast

- Retirement rate

Operations & Support Metrics:

- Incident response and resolution time

- Problem resolution rate

- Metadata quality

- Performance and response times

- Service availability

- First-time release accuracy

Management Metrics:

- Application portfolio size

- Number of interfaces and services

- Project statistics

- Standards exceptions

- Staff certification rates
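Several of these metrics reduce to simple ratios once the underlying records exist. The Python sketch below computes four of them; the exact definitions (for example, counting a service as "reused" once more than one project consumes it) are assumptions, and each CoE should settle its own definitions before reporting. The sample data is made up.

```python
from datetime import datetime, timedelta
from statistics import mean


def reuse_rate(consumers_per_service: dict[str, int]) -> float:
    """Share of services used by more than one project (assumed definition)."""
    if not consumers_per_service:
        return 0.0
    reused = sum(1 for n in consumers_per_service.values() if n > 1)
    return reused / len(consumers_per_service)


def defect_rate(defects_in_warranty: int, services_delivered: int) -> float:
    """Defects found during the warranty period per delivered service."""
    if services_delivered <= 0:
        raise ValueError("services_delivered must be positive")
    return defects_in_warranty / services_delivered


def mean_resolution_hours(incidents: list[tuple[datetime, datetime]]) -> float:
    """Average time from incident opened to incident resolved, in hours."""
    if not incidents:
        raise ValueError("no incidents to average")
    return mean([(resolved - opened) / timedelta(hours=1) for opened, resolved in incidents])


def availability(uptime: timedelta, downtime: timedelta) -> float:
    """Fraction of the measured period during which the service was up."""
    return uptime / (uptime + downtime)


services = {"customer-lookup": 3, "pdf-render": 1, "address-check": 2, "legacy-export": 0}
incidents = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 11, 0)),
    (datetime(2024, 5, 2, 10, 0), datetime(2024, 5, 2, 14, 0)),
]
print(reuse_rate(services))                                    # 0.5
print(defect_rate(3, 60))                                      # 0.05
print(mean_resolution_hours(incidents))                        # 3.0
print(availability(timedelta(hours=999), timedelta(hours=1)))  # 0.999
```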

The Delivery Approach involves the following steps:


- Start: Kick-off with Executive Sponsor.

- Understand Landscape: Assess current and future state.

- Architecture Assessment: Create an assessment report.

- Identify Priorities: Define technical foundation priorities and deliverables.

- Develop and Execute Plan: Formulate and execute the technical foundation development plan, covering architecture, development, infrastructure, and common services.

- CoE Quick Start: Establish organization, process, governance, CoE definition, and evolution strategy.

- Follow-On Work: Conduct additional work as per the high-level program plan.

The Path: Today vs Long Term Focus

| Focus | Today: Project Focus | Long Term: Enterprise Focus |
|---|---|---|
| Architecture | Enterprise and project architecture definition | Enterprise architecture definition; project architect guidance, training, review |
| Design | Do the design | Define/teach how to design |
| Implementation | Do the implementation | Define/teach how to implement |
| Operation | Assist in new technology operation | Co-develop operational best practices |
| Technology Best Practices | Climb the learning curve | Share the knowledge (document, train) |
| Governance | Determine appropriate governance | See that governance practices are followed |
| Repository | Contribute services and design patterns | See that services and design patterns are entered |

Key Elements of an Effective E-Strategy

An E-Strategy is essential for leveraging technology and improving operations in today's fast-paced business environment. Here is a concise roadmap:

Evangelize

- Business Discovery: Work closely with stakeholders to align E-Strategy with business needs.

- Innovate: Define an ideal future state with next-gen tech and real-time data advantages.

- Proof of Concept (POC): Test ideas in a sandbox, demo successful ones, and shelve unsuccessful ones to save resources.

Common Services

- Standardization: Establish reusable services with thorough documentation for easier onboarding and efficient project estimates.

Evolve

- Adaptability: Streamline architecture, operations, and infrastructure for flexibility and quick delivery.

- Automation: Use dynamic profiling, scalability, and automated installation to expedite deployments.

Enforce

- Standards and Governance: Implement best practices, enforce guidelines, and establish a strong governance structure with sign-offs on key areas.

- Version Control and Bug Tracking: Maintain organized development processes to prevent errors and ensure consistency.
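Enforcement is easier to audit when standards are written down as explicit checks. The Python sketch below is a hypothetical example, with made-up standard keys, that compares a project record against a CoE checklist and returns the exceptions that have no approved waiver; the same list can feed the "Standards exceptions" management metric.

```python
from collections.abc import Collection, Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class Standard:
    key: str          # field that a project record must set to True
    description: str  # human-readable rule, reported when the check fails


# Hypothetical checklist; a real CoE would maintain its own.
STANDARDS = [
    Standard("uses_version_control", "Source code is kept in version control"),
    Standard("uses_bug_tracker", "Defects are logged in the shared bug tracker"),
    Standard("architecture_signed_off", "Architecture has CoE sign-off"),
]


def standards_exceptions(
    project: Mapping[str, bool], approved_waivers: Collection[str] = ()
) -> list[str]:
    """List the standards a project fails, skipping any the CoE has formally waived."""
    return [
        s.description
        for s in STANDARDS
        # A missing key counts as non-compliant.
        if not project.get(s.key, False) and s.key not in approved_waivers
    ]


project = {"uses_version_control": True, "uses_bug_tracker": False}
print(standards_exceptions(project))
print(standards_exceptions(project, approved_waivers={"architecture_signed_off"}))
```

Treating a missing field as a failure keeps the check strict by default; waivers make any deliberate deviation visible rather than silent. Exceptions that a project team and the CoE cannot settle between them are what the Escalate step handles.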

Escalate

- Project Collaboration: Negotiate with project teams, aligning their needs with CoE standards.

- Ownership: The CoE can guide or own infrastructure activities, balancing governance with flexibility.

Additional Considerations

Evolve standards as each project progresses, making the strategy adaptable and cost-efficient while yielding ROI.

Conclusion

A Center of Excellence is an invaluable asset for organizations navigating technological transformation. By centralizing knowledge, enforcing standards, and promoting continuous learning, a CoE enables businesses to stay competitive and agile.

Choosing the right CoE model and implementing it thoughtfully allows organizations to leverage the expertise of cross-functional teams, fostering a culture of collaboration, innovation, and excellence. Whether it's through a centralized, distributed, or highly distributed model, the ultimate goal is the same: to empower teams, streamline processes, and drive sustainable growth.

Please reach out to us for any of your cloud requirements.
